Just for grins, I wanted to save my nearly 200 blog posts and provide them to a chat bot to see what it might analyze about my posts or themes. Most chat bots can ingest PDF files, but could I turn all this site’s posts into a PDF easily?

This project has no purpose; I just wanted to see if it could be done. I didn’t do any deep research. I just jumped in and started hacking at it any way I could. Here’s how it went down.

While I initially considered getting my posts’ contents from the site’s SQL database, that text content would be pure HTML. Plus, it would be so much cooler if my document had all the original page images and layouts. So instead, I looked at printing every page to a PDF file and then combining those into a single PDF file. That makes this basically a matter of getting a list of every blog post URL and having my browser visit each one in sequence and print to PDF. Should be easy enough.

To get a list of post URLs, I used my blog’s sitemap.xml file (specifically the posts file). I simply copied the entire table and pasted it into Excel, keeping only the URL column and saving it as a CSV text file on my desktop.
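The copy-and-paste through Excel could also be scripted. Here is a minimal sketch, assuming the posts sitemap follows the standard sitemaps.org layout (a `<urlset>` containing `<url><loc>` entries). The sample XML, the `example.com` addresses, and the function names are made up for illustration and are not what I actually ran:

```python
import csv
import io
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> URL found in a sitemap document, in file order."""
    root = ET.fromstring(xml_text)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return [loc.text.strip() for loc in locs if loc.text]


def urls_to_csv(urls: list[str]) -> str:
    """Render the URLs as one-column CSV text, one URL per row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for url in urls:
        writer.writerow([url])
    return buffer.getvalue()


# Hypothetical sample standing in for the real posts sitemap.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/first-post/</loc><lastmod>2024-01-01</lastmod></url>
  <url><loc>https://example.com/second-post/</loc></url>
</urlset>"""

if __name__ == "__main__":
    print(urls_to_csv(urls_from_sitemap(SAMPLE)), end="")
```

For the real thing, the sitemap text would be downloaded or read from a saved file first, and the CSV text written to disk instead of printed.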

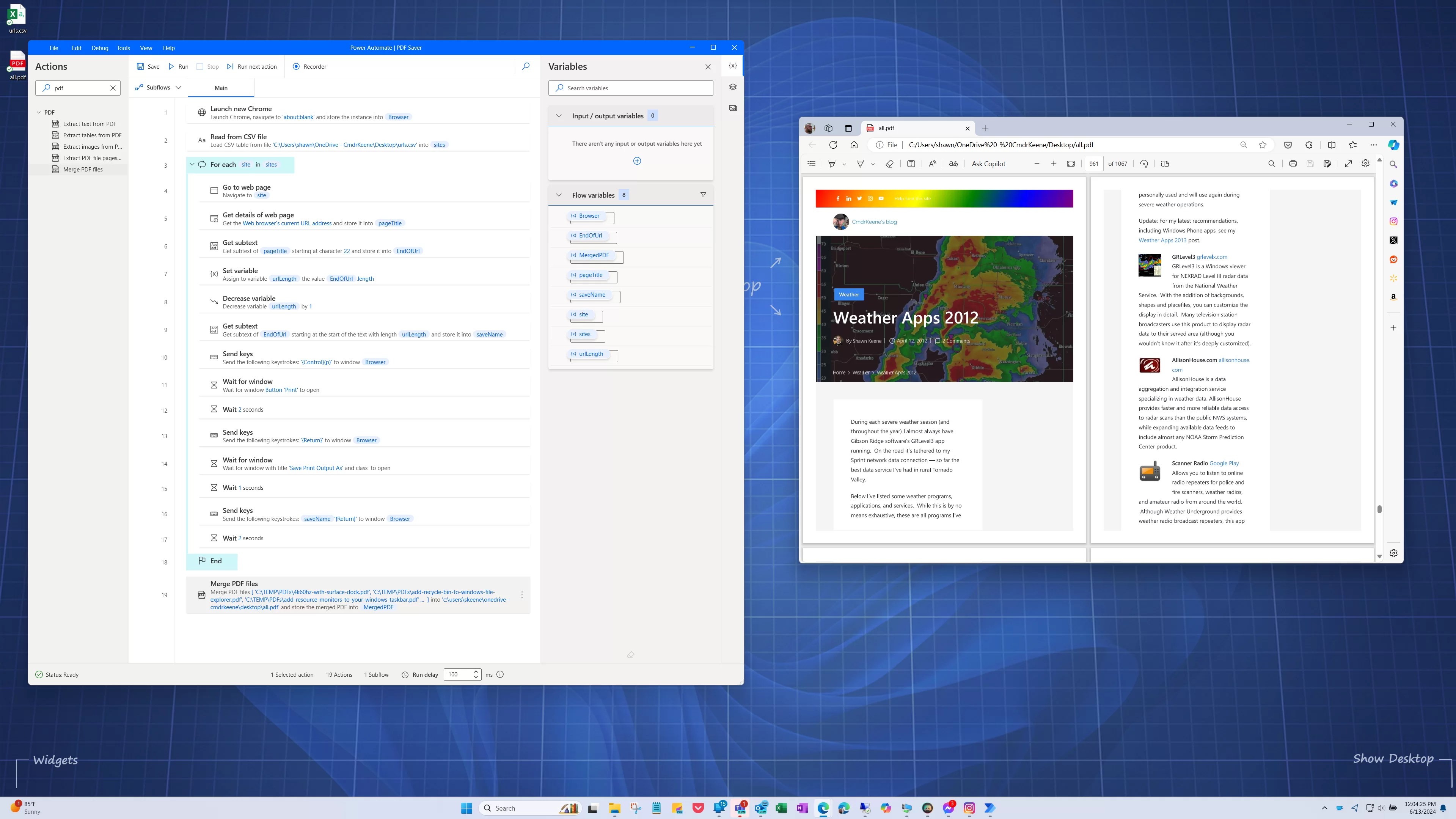

In Power Automate desktop, I created a flow that took these steps:

1. Open a browser and assign it a name we can reference later (I used “Browser”. Creative, I know).
2. Read the contents of the CSV file into a variable called “sites” (should have called it pages or posts, really… it’s all the same website).
3. For each site (post) in the sites (posts) list:
    1. Have the browser go to that URL.
    2. Have the browser extract the bit of the URL that follows the “.com/” and save it as a variable we can use to name our PDF file later. A few steps here help accomplish this by finding the length of the URL text and then cutting out the right portion.
        - I realize I could have used the text of the site URL without asking the browser to get that text again (it’s obviously the same URL in my CSV file). Heck, my CSV could have even had a second column with a friendly name to use for storing each page, but I don’t intend to keep the individual files anyway.
    3. Send the Ctrl+P key combination to the browser so it opens the Print dialog, wait for the Print button (the default button in the dialog) to appear, then press Enter to print the page to a PDF.
    4. When the “Save” dialog box appears, send the text retrieved in step 3.2 above, then press Enter to save the file.
    5. Return to step 3.1 and repeat for the next URL in the CSV text file.
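The file-naming step, where the flow pulls out whatever follows “.com/”, is plain string handling. Here is a sketch of the same idea in Python, assuming every post URL looks like `https://<domain>.com/<slug>/`. The function names and the `example.com` addresses are hypothetical and not part of the actual flow:

```python
from urllib.parse import urlparse


def slug_from_url(url: str) -> str:
    """Return the URL path after the domain, cleaned up for use as a file name."""
    path = urlparse(url).path.strip("/")
    # Nested paths such as "2024/05/my-post" contain slashes, which are not
    # valid in file names, so swap them for dashes. Fall back to "index"
    # for the bare home page.
    return path.replace("/", "-") or "index"


def slug_by_position(url: str, marker: str = ".com/") -> str:
    """Same idea done the way the flow does it: find the marker, then cut."""
    position = url.find(marker)
    if position == -1:
        raise ValueError(f"{marker!r} not found in {url!r}")
    return url[position + len(marker):].strip("/")


if __name__ == "__main__":
    print(slug_from_url("https://example.com/my-first-post/"))
    print(slug_by_position("https://example.com/my-first-post/"))
```

The `urlparse` version does not care what the domain ends in, while the position-based one mirrors the find-and-cut approach and only works when the marker is present.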

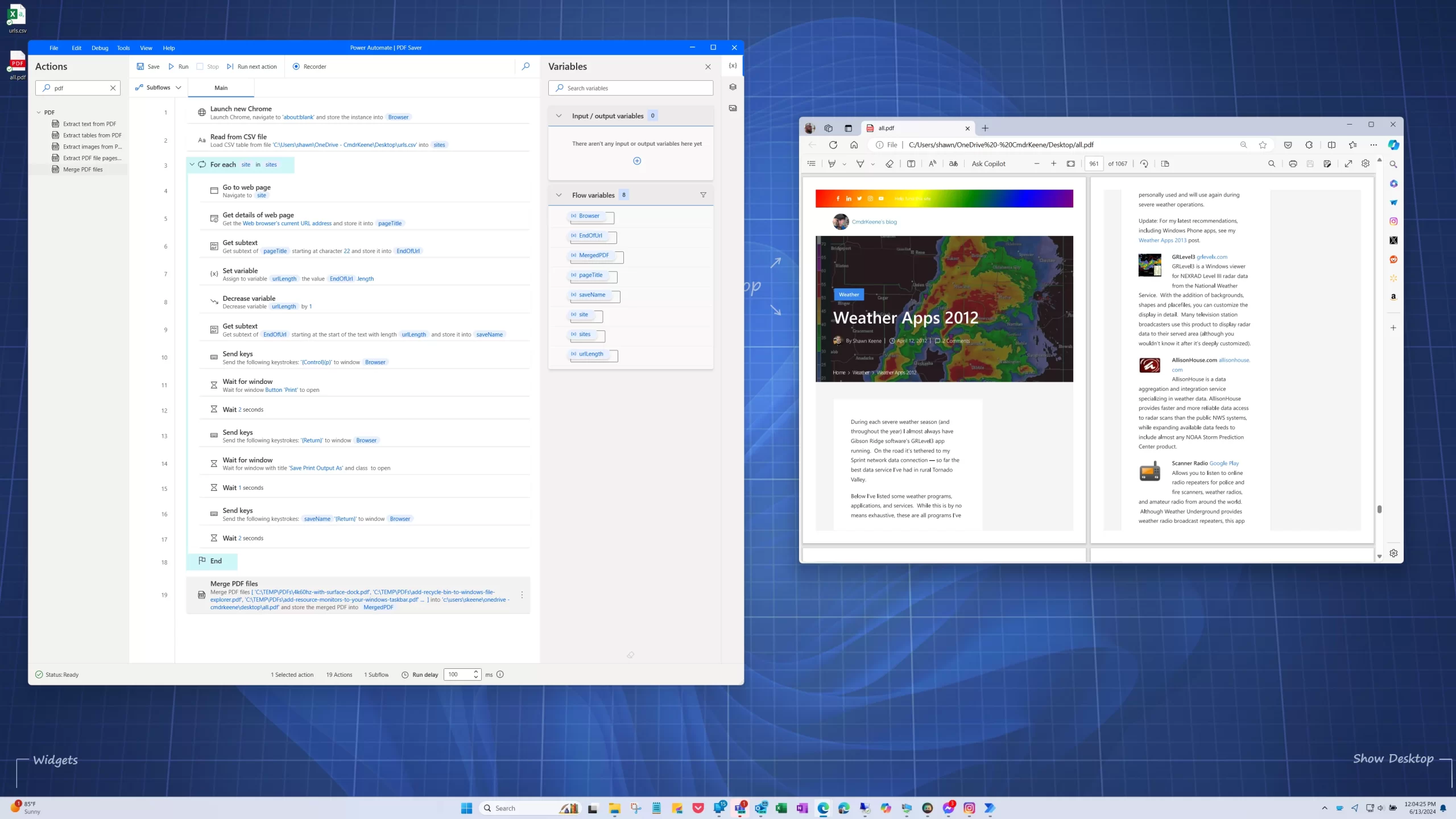

Finally, I used the Power Automate “Merge PDF files” action to instantly combine all of these files into a single All.pdf file, more than 1060 pages long.
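Outside Power Automate, the same merge could be done with a PDF library. Here is a rough sketch using the third-party `pypdf` package, which is an assumption on my part since the flow itself used the built-in action. The folder name, file names, and function names are hypothetical. It merges in the order of the URL list rather than alphabetically:

```python
from pathlib import Path


def ordered_pdf_paths(slugs: list[str], folder: Path) -> list[Path]:
    """Map each post slug to its expected PDF path, preserving list order."""
    return [folder / f"{slug}.pdf" for slug in slugs]


def merge_pdfs(paths: list[Path], output: Path) -> None:
    """Append every PDF in order and write the combined file."""
    # Imported here so the helper above still works without pypdf installed.
    from pypdf import PdfWriter  # third-party: pip install pypdf

    writer = PdfWriter()
    for path in paths:
        writer.append(str(path))
    with open(output, "wb") as handle:
        writer.write(handle)
    writer.close()


# Example call (assumes the individual PDFs already exist in ./pdfs):
# merge_pdfs(ordered_pdf_paths(["first-post", "second-post"], Path("pdfs")),
#            Path("pdfs") / "All.pdf")
```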

And wouldn’t you know it, the resulting 253 MB PDF file is too large to attach to a Copilot query and ask questions against. D’oh.

I’ll probably delete everything from this exploration anyway; it was just to see if this could be done. The flow took about 20 minutes to build and about 50 minutes to visit all 191 pages and save the PDFs.

Related reading: Many years ago, I did something similar in Automating IE With PowerShell to Capture Screenshots, grabbing screenshots of thousands of website homepages.
